222222

Section 1: Building and installing NGINX from source

This is an extensive tutorial I wrote together. It goes through how to build and install NGINX. I'm also talking about how to properly configure your TLS certificates for your domain name. The reason you want to build it from source is because you get the latest stable version that way. Otherwise, you risk getting an older version, which is often what's included in the apt repository.

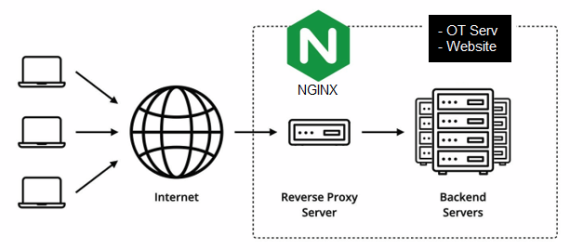

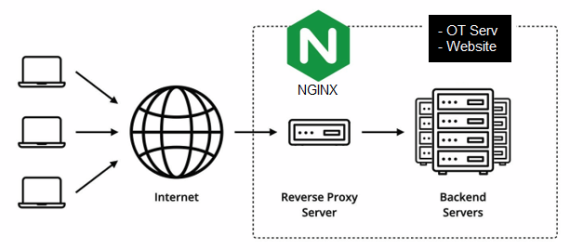

NGINX is a popular software that can be used for a lot of things. It can be used as a web server, a reverse proxy or a load balancer, just to name a few things. I recommend people to use it because it adds an extra layer of security to your server applications. It's also compatible with pretty much any type of software, whether that's an OT server, a Node.js web application or a regular website. What we want to use it for, is a reverse proxy. NGINX will sit as a middleman between the user and your OT server or website. All communication sent to/from your server will in other words be routed through NGINX. This is good because we can filter out bad actors and optimize websites for performance and ensure security headers are applied.

In this tutorial I will cover everything you need to know about NGINX. We're going to be building it from scratch so that you get the latest stable version of it. I'll also be going over how you can configure it to be as secure and performant as possible. We're going to do all of this on Linux, namely a Debian 12 server. You can follow along on other operating systems as well but there might be differences in how to do things.

Important note: I'd also like to mention that the main developer of NGINX, Maxim Dounin, recently left the NGINX project and created his own fork called FreeNGINX due to some corporate drama. It might be a good option to switch over to FreeNGINX. He made the most development and changes to NGINX over the years. He is essentially NGINX. And his FreeNGINX fork is exactly the same as regular NGINX, just that it will continue to get great updates. In this tutorial however, I'm going to be using NGINX (and not FreeNGINX). If you want to read more about FreeNGINX, you can read his statement. The process (building and securing) is exactly the same.

Now let's start by looking at the NGINX website and figure out what the latest stable version is. Since the goal is to make a secure and performant NGINX installation, we do not want to use any beta version of this software. At the time of this post, NGINX version 1.26.0 is the latest stable. Now open up your Linux server and sign in to it and we'll start building it. We will have to install some build essentials for this, so run the following to install all essential tools and download the latest stable version of NGINX.

Before we build and install NGINX, we have to setup a new system user on the server. Since this will run as a service, we do not want our regular user account to run it. Instead we want to limit the access rights and setup a custom user whose only purpose is to run the NGINX server. We will simply call the new user and its group "nginx". Run the following to add the new user:

Now let's build the installer for NGINX using all recommended modules. NGINX allows us to customize the build and include/exclude certain modules (features). Below, I've compiled the list of all modules you may need for a webserver and OT server. Run the following command to configure the installer:

Now it's time to build the installer and install NGINX. Depending on your CPU cores, adjust the number accordingly. I'm going to be using "j4" since my server has 4 CPU cores. If you have 8 for example, use "j8" to make it build faster.

To finalize the installation of NGINX, let's setup a systemd service so that we can easily start/stop/restart the server whenever we need to. We'll create a new system service called "nginx" like this:

Then paste the following into the NGINX service configuration. You can save the file using Ctrl+O → Enter → Ctrl+X.

Now all that's left is to refresh the systemd services and start NGINX.

So if you ever want to stop NGINX, simply run "sudo systemctl stop nginx" for example. Or "start" / "restart" to start or restart it.

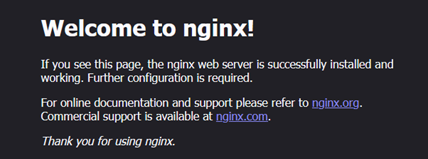

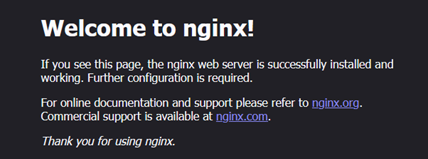

Since we started NGINX, you should now be able to see an NGINX landing page if you visit your server's IP address (or domain name, if you have one).

You have now installed the latest version of NGINX. Now let's continue by configuring it for the best security and performance. By default, it doesn't come with much security features enabled. And if you have a website domain name linked to your server, it's important that we properly setup TLS certificates in NGINX. So for now, let's close the NGINX service until it has been configured.

In Section 5 below I will talk about security and performance in NGINX.

Section 2: Installing and configuring TLS certificate in NGINX

This section of the tutorial only applies to users that have purchased a domain name and plan to put NGINX in front of your webserver (for example your OT server website). If you do not have a domain name, or do not plan to use NGINX for your web, skip this section. We will start off by configuring TLS in our domain registrar.

The most recommended domain registrar is Cloudflare, due to them offering a lot of security and performance features, such as anti-DDoS protection, and they also do not add any extra fees to domain names. So if you haven't already, I urge you to use Cloudflare for your domain names. When you make public websites these days, you have to use a TLS certificate in order to protect communication on your website. TLS was previously known as SSL ("https"). There are many different versions of TLS. What I recommend is to only allow TLS 1.2 and above. This ensures only modern devices will be compatible with your website. For users visiting it using for example Windows XP or an older browser, they will simply not be able to use it because their device is not compatible with TLS 1.2 and above.

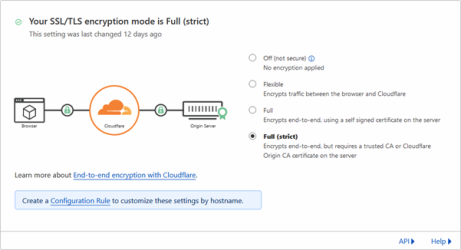

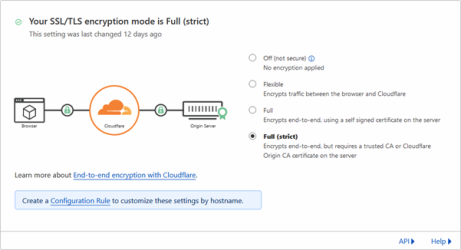

Once you have purchased your domain in Cloudflare, head to the "SSL/TLS → Overview" page in your Cloudflare dashboard and make sure you have selected to use "Full (Strict)" TLS encryption.

Then head to the "SSL/TLS → Edge Certificates" page and set the following settings to improve the overall security of your domain.

Lastly, setup DNS records so that your domain name points to your server IP address. You do that by going to the "DNS → Records" page in Cloudflare dashboard. Create two new DNS records using the following information:

We are now done configuring TLS in Cloudflare. Now let's configure TLS in NGINX. The way TLS works is that these certificates are handed out by so called "Certificate Authorities" (CA). However, Cloudflare and most other domain registrars are not CAs. Instead, they act as a middleman between you and a CA. In order to make this work properly, we have to setup a so called Origin Server Certificate. It is essentially a certificate that tells a CA that Cloudflare may create TLS certificates on behalf of you, like a contract. Some domain registrars (including Cloudflare) offers to create Origin Server Certificates via their website, but just to make sure we are the only ones with its private key, we will create one ourselves.

Now things are going to be a bit confusing, but in order to create the Origin Server Certificate, we have to generate a so called Certificate Signing Request (CSR) file and give it to Cloudflare. So in short, first we create a CSR file linked to our own private key and upload it to Cloudflare. Cloudflare will then generate an Origin Server Certificate for us. We will then upload this file to our server and that way we will get a TLS certificate. This is the best way of doing it if you want full control over the TLS of your website. It involves more steps than necessary to get TLS, but if you want to do it right, this is how it's done.

So let's start by creating a new CSR file on our server. We will place it inside "/etc/ssl".

Change "<domain>" to your domain name in the command below.

You will be asked to create a password for this CSR file. Make sure you write it down and store it somewhere safe. It will then ask a few questions about your country and such. You skip those questions by just pressing Enter. However, when it asks for a "Common Name" you must type the following (changing <domain> to your domain name).

This means that we will enable TLS not only on our domain name, but all of its subdomains as well.

After that it will ask about "Email address", "Challenge password" and "Optional company name". Leave all of them blank and skip them by pressing Enter.

You should now have two new files created, named after your domain:

Paste your CSR file password into the file and save it (Ctrl+O → Enter → Ctrl+X). Then we need to give the nginx user permission to read this file. Run the following:

At this point, I recommend you to make backups of your CSR and private key file, in case you ever lose them. They can be used to change the TLS certificate of your domain. So it's important that you do not lose them.

We are now ready to upload the CSR file to Cloudflare. In turn, they will generate an Origin Server Certificate file for us. Head to the Cloudflare dashboard and enter the "SSL/TLS → Origin Server" page and click on "Create Certificate". Select the option "Use my private key and CSR" and paste the contents of your CSR file into the form. Then in the "hostnames" field enter both of these (changing "<domain>" to your domain).

Select how long your Origin Server Certificate should be valid for. This is how long time you want to give Cloudflare permission to generate and renew TLS certificates, on behalf of you. I'm personally going with 15 years, for convenience sake. Then click on "Create" and your Origin Server Certificate file will be generated. Copy all of its content and save it on your computer, somewhere safe.

Now all you need to do is install the Origin Server Certificate on the server. Let's create its file on the server (changing "<domain>" to your domain name):

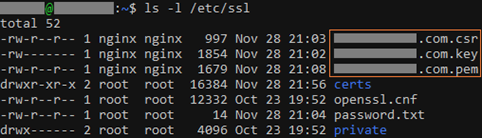

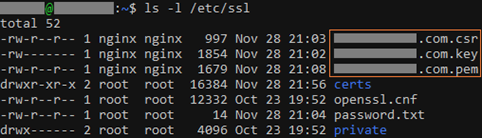

And paste in the content from the Cloudflare website into the file and save it (Ctrl+O → Enter → Ctrl+X). You should now have three files: A private key (".key"), a CSR file (".csr") and an Origin Server Certificate (".pem") all named after your domain name. E.g. "example.com.key", "example.com.csr" and "example.com.pem". You can verify that you have them by running the following:

The last thing to do here is to allow NGINX to access these files. We only want the "nginx" user to do this and nothing else on your server. Change "<domain>" to your domain name.

Section 3: Generating a Diffie-Hellman key exchange

This section only applies to users that did Section 2 in this tutorial.

A Diffie-Hellman (DH) key exchange ensures that the exchange of the TLS certificate itself is being transferred via an encrypted communication channel. While a TLS certificate encrypts communication on your website, a DH key encrypts the transfer of the TLS certificate between you and your website visitors. Unfortunately, not many people know about this and its importance in terms of security. So if you want the highest grade of encryption on your website's communication, use a DH key as a compliment to your TLS certificate.

To generate a DH key, run the following command and let it finish. It can take anywhere between 5min and 1 hour to complete, depending on your server's hardware specification. We will later on make sure our NGINX configuration loads this file.

Section 4: Creating a CAA DNS record

This section only applies to users that did Section 2 in this tutorial.

I already explained what a Certificate Authority (CA) is. But what a CAA DNS record is, is essentially a whitelist of CAs that we allow to generate TLS certificates for us. There are in fact bad CAs on the market (thankfully they get shutdown rather quickly). But to only allow "letsencrypt.org" to generate TLS certificates for our domain, we can setup a CAA DNS record in Cloudflare. This means we will only allow Lets Encrypt as a CA.

Open up the Cloudflare dashboard and create a new DNS record inside the "DNS → Records" page with the following information:

Section 5: Optimizing NGINX for security and performance

This is the most important section of this tutorial. NGINX offers us to customize a lot of settings. There are over 100 different settings that have to be adjusted if you want to properly secure and get full performance out of it. All this configuration is done inside the NGINX configuration file on your server, which you can find here:

Because there are so many settings, I have chosen to upload an optimized NGINX configuration template ("nginx.txt") attached in this tutorial. I have placed comments for everything in that file. Please read it through as you will have to fill out your domain name at some places in it (line 203-204). Once you have replaced the default NGINX configuration with the new one and saved it (using Ctrl+O → Enter → Ctrl+X) it's time to enable NGINX for autostart on server boot, and to start the service:

You have now properly setup NGINX and your TLS certificates for your website and server application. Note that it also adds Cloudflare as a CDN in front of your website, to further enhance performance and security. So whenever a user visits your website, they will route like this:

User → Cloudflare CDN → NGINX Reverse Proxy → Backend

Create as many "server" blocks as you want inside the NGINX server configuration. I included on for a website as an example, running on port 8080 (change it to whatever port your website app is running on). You can also add one for your OT server application and port 7171. Just make sure to restart the NGINX service whenever you make any changes to it.

You can then test your TLS certificate on SSL Labs website. By following this tutorial you will end up with an A+ score which is the highest rating. So not only will you get a very secure and performant NGINX installation, but you will also get the highest grade of TLS possible, for free, by following this tutorial. There is no need to purchase any TLS certificates from DigiCert for hundreds of Euros for example, just to get a better rating than what 99% of this world use. This is by far the best way to do it and should be done for any website that you host, regardless of its importance.

If you have any questions, please post them down below.

This is an extensive tutorial I put together. It goes through how to build and install NGINX, and how to properly configure TLS certificates for your domain name. The reason to build NGINX from source is that you get the latest stable version that way. Otherwise you risk getting an older version, which is often what's included in the apt repository.

NGINX is popular software that can be used for a lot of things: as a web server, a reverse proxy or a load balancer, just to name a few. I recommend it because it adds an extra layer of security in front of your server applications. It's also compatible with pretty much any type of software, whether that's an OT server, a Node.js web application or a regular website. What we want to use it for is a reverse proxy. NGINX will sit as a middleman between the user and your OT server or website, so all communication to and from your server is routed through NGINX. This is good because we can filter out bad actors, optimize websites for performance and ensure security headers are applied.

In this tutorial I will cover everything you need to know about NGINX. We're going to be building it from scratch so that you get the latest stable version of it. I'll also be going over how you can configure it to be as secure and performant as possible. We're going to do all of this on Linux, namely a Debian 12 server. You can follow along on other operating systems as well but there might be differences in how to do things.

Important note: I'd also like to mention that the main developer of NGINX, Maxim Dounin, recently left the NGINX project and created his own fork called FreeNGINX due to some corporate drama. It might be a good option to switch over to FreeNGINX. He contributed most of the development and changes to NGINX over the years, so he is essentially NGINX. His FreeNGINX fork is essentially the same as regular NGINX, just that it will continue to get his updates. In this tutorial however, I'm going to be using NGINX (and not FreeNGINX). If you want to read more about FreeNGINX, you can read his statement. The process (building and securing) is exactly the same.

Now let's start by looking at the NGINX website and figuring out what the latest stable version is. Since the goal is a secure and performant NGINX installation, we do not want to use a development version of this software (NGINX calls its development branch "mainline"). At the time of this post, NGINX version 1.26.0 is the latest stable. Now sign in to your Linux server and we'll start building. We need some build tools for this, so run the following to install them and download the latest stable version of NGINX.

Bash:

sudo apt update

sudo apt dist-upgrade

sudo apt install build-essential libpcre3-dev libssl-dev zlib1g-dev libgd-dev

wget https://nginx.org/download/nginx-1.26.0.tar.gz

tar -xzvf nginx-1.26.0.tar.gz

cd nginx-1.26.0

Before we build and install NGINX, we have to set up a new system user on the server. Since NGINX will run as a service, we do not want our regular user account to run it. Instead, we limit the access rights by creating a dedicated user whose only purpose is to run the NGINX server. We will simply call the new user and its group "nginx". Run the following to add the new user:

Bash:

sudo adduser --system --no-create-home --shell /bin/false --disabled-login --group nginx

Now let's configure the build with all recommended modules. NGINX allows us to customize the build and include or exclude certain modules (features). Below, I've compiled the list of all modules you may need for a web server and an OT server. Run the following command to configure the build:

Bash:

./configure --prefix=/var/www/html --sbin-path=/usr/sbin/nginx --modules-path=/etc/nginx/modules --conf-path=/etc/nginx/nginx.conf --error-log-path=/var/log/nginx/error.log --pid-path=/etc/nginx/nginx.pid --lock-path=/etc/nginx/nginx.lock --user=nginx --group=nginx --with-threads --with-file-aio --with-http_ssl_module --with-http_v2_module --with-http_realip_module --with-http_addition_module --with-http_image_filter_module=dynamic --with-http_sub_module --with-http_mp4_module --with-http_gzip_static_module --with-http_auth_request_module --with-http_secure_link_module --with-http_slice_module --with-http_stub_status_module --http-log-path=/var/log/nginx/access.log --with-stream --with-stream_ssl_module --with-stream_realip_module --with-compat --with-pcre-jit

Now it's time to compile and install NGINX. Adjust the number after "-j" to the number of CPU cores on your server (run "nproc" if you are unsure). I'm going to be using "-j4" since my server has 4 CPU cores. If you have 8, for example, use "-j8" to make it build faster.

Bash:

make -j4

sudo make install

To finalize the installation of NGINX, let's set up a systemd service so that we can easily start, stop and restart the server whenever we need to. We'll create a new system service called "nginx" like this:

Bash:

sudo nano /etc/systemd/system/nginx.service

Then paste the following into the NGINX service configuration. You can save the file using Ctrl+O → Enter → Ctrl+X.

Code:

[Unit]

Description=The NGINX HTTP and reverse proxy server

After=syslog.target network-online.target remote-fs.target nss-lookup.target

Wants=network-online.target

[Service]

Type=forking

PIDFile=/etc/nginx/nginx.pid

ExecStartPre=/usr/sbin/nginx -t

ExecStart=/usr/sbin/nginx

ExecReload=/usr/sbin/nginx -s reload

ExecStop=/bin/kill -s QUIT $MAINPID

PrivateTmp=true

[Install]

WantedBy=multi-user.target

Now all that's left is to refresh the systemd services and start NGINX.

Bash:

sudo systemctl daemon-reload

sudo systemctl start nginx

sudo systemctl status nginx

If you ever want to stop NGINX, simply run "sudo systemctl stop nginx". Use "start" or "restart" in place of "stop" to start or restart it.

Since we started NGINX, you should now be able to see an NGINX landing page if you visit your server's IP address (or domain name, if you have one).
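If you want to confirm that the installed binary was really built with the modules from the configure step, "nginx -V" prints the configure arguments (on stderr). The following is a minimal sketch of such a check; the helper names has_module and check_modules are my own and not part of NGINX.

```shell
#!/bin/sh
# has_module BUILD_INFO MODULE
# Succeeds if the `nginx -V` text in BUILD_INFO contains --with-MODULE
# (optionally followed by "=dynamic").
has_module() {
    printf '%s\n' "$1" | tr ' ' '\n' | grep -Eq -- "^--with-$2(=dynamic)?\$"
}

# check_modules BUILD_INFO MODULE...
# Prints one "ok" or "MISSING" line per module and fails if any is missing.
check_modules() {
    info=$1
    shift
    rc=0
    for m in "$@"; do
        if has_module "$info" "$m"; then
            echo "ok      $m"
        else
            echo "MISSING $m"
            rc=1
        fi
    done
    return "$rc"
}
```

On the server you could call it as: check_modules "$(nginx -V 2>&1)" http_ssl_module http_v2_module stream stream_ssl_module. The "2>&1" is needed because the build information is written to stderr.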

You have now installed the latest version of NGINX. Now let's continue by configuring it for the best security and performance. By default, it doesn't come with many security features enabled. And if you have a domain name linked to your server, it's important that we properly set up TLS certificates in NGINX. So for now, let's stop the NGINX service until it has been configured.

In Section 5 below I will talk about security and performance in NGINX.

Bash:

sudo systemctl stop nginx

Section 2: Installing and configuring TLS certificate in NGINX

This section of the tutorial only applies if you have purchased a domain name and plan to put NGINX in front of your web server (for example your OT server website). If you do not have a domain name, or do not plan to use NGINX for your website, skip this section. We will start off by configuring TLS at our domain registrar.

I recommend Cloudflare as domain registrar because they offer a lot of security and performance features, such as anti-DDoS protection, and they do not add any extra fees on top of the domain price. So if you haven't already, I urge you to use Cloudflare for your domain names. When you make public websites these days, you have to use a TLS certificate to protect the communication on your website. TLS is the successor to SSL and is what puts the "https" in your address. There are several versions of TLS. What I recommend is to only allow TLS 1.2 and above. This means only reasonably modern devices can connect to your website. Users visiting with, for example, Windows XP or an old browser will simply not be able to use it, because their software does not support TLS 1.2 or above.

Once you have purchased your domain in Cloudflare, head to the "SSL/TLS → Overview" page in your Cloudflare dashboard and make sure you have selected to use "Full (Strict)" TLS encryption.

Then head to the "SSL/TLS → Edge Certificates" page and set the following settings to improve the overall security of your domain.

Code:

Always Use HTTPS: enabled

HTTP Strict Transport Security (HSTS):

- Enable HSTS (Strict-Transport-Security): enabled

- Max Age Header (max-age): 12 months

- Apply HSTS policy to subdomains (includeSubDomains): enabled

- Preload: enabled

- No-Sniff Header: enabled

Minimum TLS Version: TLS 1.2

Opportunistic Encryption: enabled

TLS 1.3: enabled

Automatic HTTPS Rewrite: enabled

Lastly, set up DNS records so that your domain name points to your server IP address. You do that by going to the "DNS → Records" page in the Cloudflare dashboard. Create two new DNS records using the following information:

Code:

Type: A

Name: @

IPv4 address: <ipAddress of your server>

Proxy status: enabled (proxied)

Type: A

Name: www

IPv4 address: <ipAddress of your server>

Proxy status: enabled (proxied)

We are now done configuring TLS in Cloudflare. Now let's configure TLS in NGINX. TLS certificates are issued by so-called Certificate Authorities (CAs). With the proxy enabled, the certificate your visitors see is one that Cloudflare obtains from a public CA on your behalf, so Cloudflare sits as a middleman between your visitors and your server. To also encrypt the hop between Cloudflare and NGINX (which the "Full (Strict)" mode requires), we have to set up a so-called Origin Server Certificate. It is a certificate signed by Cloudflare's own Origin CA. Cloudflare trusts it when connecting to your server, but browsers do not, so it only works while traffic is proxied through Cloudflare. Cloudflare can generate the private key for an Origin Server Certificate on its website, but to make sure we are the only ones who ever hold the private key, we will create the key ourselves.

Now things are going to be a bit confusing, but in order to create the Origin Server Certificate, we have to generate a so-called Certificate Signing Request (CSR) file and give it to Cloudflare. In short: first we create a private key and a CSR file linked to it, and upload the CSR to Cloudflare. Cloudflare then generates an Origin Server Certificate for us. Finally, we place that certificate on our server, where NGINX uses it together with the private key. This is the best way of doing it if you want full control over the TLS of your website. It involves more steps than strictly necessary to get TLS, but if you want to do it right, this is how it's done.

So let's start by creating a new CSR file on our server. We will place it inside "/etc/ssl".

Change "<domain>" to your domain name in the command below.

Bash:

cd /etc/ssl

sudo openssl req -newkey rsa:2048 -keyout <domain>.key -out <domain>.csr

You will be asked to create a passphrase ("PEM pass phrase"), which protects the private key. Make sure you write it down and store it somewhere safe. It will then ask a few questions about your country and such. You can skip those questions by just pressing Enter. However, when it asks for a "Common Name" you must type the following (changing <domain> to your domain name).

Code:

*.<domain>

This means that the certificate covers all subdomains of our domain. The bare domain name itself is added later, when we request the certificate from Cloudflare.

After that it will ask about "Email address", "Challenge password" and "Optional company name". Leave all of them blank and skip them by pressing Enter.

You should now have two new files created, named after your domain:

- A private key file (".key")

- A CSR file (".csr")
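Before moving on, it can be worth checking that the CSR really belongs to the private key and carries the wildcard name. This is a small sketch using standard openssl subcommands; the function names csr_matches_key and csr_subject are hypothetical helpers of mine, not part of OpenSSL.

```shell
#!/bin/sh
# csr_matches_key CSR KEY
# Succeeds if the public key embedded in the CSR equals the public key
# derived from the private key file. Returns 2 if either file cannot be read.
csr_matches_key() {
    csr_pub=$(openssl req -in "$1" -noout -pubkey) || return 2
    key_pub=$(openssl pkey -in "$2" -pubout) || return 2
    [ "$csr_pub" = "$key_pub" ]
}

# csr_subject CSR
# Prints the subject line of the CSR, which should contain CN=*.<domain>.
csr_subject() {
    openssl req -in "$1" -noout -subject
}
```

Since the files live in "/etc/ssl" and the key is passphrase-protected, you would run these as root, and openssl will prompt for the passphrase when it reads the key.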

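Next, create a file to store the passphrase in, so that NGINX can read it when it loads the private key:

Bash:

sudo nano /opt/cert

Paste your private key passphrase into the file and save it (Ctrl+O → Enter → Ctrl+X). Then we need to give the nginx user permission to read this file. Run the following: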

Bash:

sudo chown nginx:nginx /opt/cert

sudo chmod 600 /opt/cert

At this point, I recommend that you make backups of your CSR and private key files. The certificate is useless without the private key, and the CSR lets you request a new certificate for the same key, so it's important that you do not lose them.

We are now ready to upload the CSR file to Cloudflare. In turn, they will generate an Origin Server Certificate file for us. Head to the Cloudflare dashboard and enter the "SSL/TLS → Origin Server" page and click on "Create Certificate". Select the option "Use my private key and CSR" and paste the contents of your CSR file into the form. Then in the "hostnames" field enter both of these (changing "<domain>" to your domain).

Code:

*.<domain>

<domain>

Select how long your Origin Server Certificate should be valid for. This is how long the certificate can be used before you have to create and install a new one. I'm personally going with 15 years, for convenience's sake. Then click on "Create" and your Origin Server Certificate will be generated. Copy all of its content and save it on your computer, somewhere safe.

Now all you need to do is install the Origin Server Certificate on the server. Let's create its file on the server (changing "<domain>" to your domain name):

Bash:

sudo nano /etc/ssl/<domain>.pem

Paste the content from the Cloudflare website into the file and save it (Ctrl+O → Enter → Ctrl+X). You should now have three files: a private key (".key"), a CSR file (".csr") and an Origin Server Certificate (".pem"), all named after your domain name. E.g. "example.com.key", "example.com.csr" and "example.com.pem". You can verify that you have them by running the following:

Bash:

ls -l /etc/ssl
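Besides checking that the files exist, you may want to confirm that the certificate you pasted is valid for as long as you expect. A minimal sketch, assuming openssl is installed; cert_valid_for_days and cert_summary are helper names I made up for this example.

```shell
#!/bin/sh
# cert_valid_for_days CERT DAYS
# Succeeds if the certificate in CERT is still valid DAYS days from now.
# Uses `openssl x509 -checkend`, which takes the look-ahead in seconds.
cert_valid_for_days() {
    openssl x509 -in "$1" -noout -checkend "$(( $2 * 86400 ))" >/dev/null
}

# cert_summary CERT
# Prints subject, issuer and expiry date of the certificate.
cert_summary() {
    openssl x509 -in "$1" -noout -subject -issuer -enddate
}
```

For example, cert_valid_for_days /etc/ssl/example.com.pem 365 should succeed for a freshly created 15-year certificate, and cert_summary shows which hostnames and issuer ended up in it.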

The last thing to do here is to allow NGINX to access these files. We only want the "nginx" user to do this and nothing else on your server. Change "<domain>" to your domain name.

Code:

sudo chown nginx:nginx /etc/ssl/<domain>.*

sudo chmod 600 /etc/ssl/<domain>.key

sudo chmod 644 /etc/ssl/<domain>.csr

sudo chmod 644 /etc/ssl/<domain>.pem

Section 3: Generating a Diffie-Hellman key exchange

This section only applies to users that did Section 2 in this tutorial.

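A Diffie-Hellman (DH) key exchange lets your server and a visitor agree on a shared session key over an open connection, without ever sending that key across the wire. The TLS certificate proves that visitors are talking to your server, while the key exchange produces the key that actually encrypts the traffic. With ephemeral DH (DHE) cipher suites, every session gets a fresh key, so recorded traffic stays protected even if your private key leaks later (forward secrecy). NGINX only offers DHE cipher suites when it is given a DH parameter file, which is what we generate here. Unfortunately, not many people know about this and its importance in terms of security. So if you want the highest grade of encryption on your website's communication, use your own DH parameters as a complement to your TLS certificate.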
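To give a feel for what a DH exchange does, here is a toy example with deliberately tiny numbers (p = 23, g = 5). It only illustrates the arithmetic; real TLS uses parameters thousands of bits long, and the modpow helper is just something I wrote for this sketch.

```shell
#!/bin/sh
# modpow BASE EXP MOD
# Prints BASE^EXP mod MOD using square-and-multiply.
modpow() {
    base=$(( $1 % $3 ))
    exp=$2
    mod=$3
    result=1
    while [ "$exp" -gt 0 ]; do
        if [ $(( exp % 2 )) -eq 1 ]; then
            result=$(( result * base % mod ))
        fi
        base=$(( base * base % mod ))
        exp=$(( exp / 2 ))
    done
    echo "$result"
}

# Public parameters (this is the role the dhparam file plays).
p=23
g=5

# Private values, never sent over the network.
a=6    # server
b=15   # visitor

# Public values, exchanged in the open.
A=$(modpow "$g" "$a" "$p")
B=$(modpow "$g" "$b" "$p")

# Each side combines its own private value with the other's public value.
secret_server=$(modpow "$B" "$a" "$p")
secret_client=$(modpow "$A" "$b" "$p")

echo "public values: A=$A B=$B"
echo "shared secret: server=$secret_server client=$secret_client"
```

Both sides end up with the same secret even though only A and B crossed the wire. An eavesdropper who sees p, g, A and B would have to solve a discrete logarithm to recover it, which is why large parameters matter.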

To generate the DH parameters, run the following command and let it finish. It can take anywhere between 5 minutes and 1 hour to complete, depending on your server's hardware. Later on we will make sure our NGINX configuration loads this file.

Bash:

sudo openssl dhparam -out /etc/ssl/certs/dhparam.pem 4096

Section 4: Creating a CAA DNS record

This section only applies to users that did Section 2 in this tutorial.

I already explained what a Certificate Authority (CA) is. A CAA DNS record is essentially a whitelist of the CAs that we allow to issue TLS certificates for our domain. There are in fact bad CAs on the market (thankfully they get shut down rather quickly). To only allow "letsencrypt.org" to issue TLS certificates for our domain, we can set up a CAA DNS record in Cloudflare. This means we will only allow Let's Encrypt as a CA.
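Once such a record has been published, you can read it back with "dig +short CAA <domain>", which prints lines such as: 0 issue "letsencrypt.org". The sketch below parses that output; caa_issuers and caa_only_allows are hypothetical helper names, and it assumes the usual "flags tag value" layout of dig's short output.

```shell
#!/bin/sh
# caa_issuers RECORDS
# Prints the CA domain of every "issue" entry in `dig +short CAA` output.
caa_issuers() {
    printf '%s\n' "$1" | awk '$2 == "issue" { gsub(/"/, "", $3); print $3 }'
}

# caa_only_allows RECORDS CA
# Succeeds if CA is the one and only CA listed in the "issue" entries.
caa_only_allows() {
    [ "$(caa_issuers "$1" | sort -u)" = "$2" ]
}
```

A possible call would be: caa_only_allows "$(dig +short CAA example.com)" letsencrypt.org, where example.com stands in for your own domain.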

Open up the Cloudflare dashboard and create a new DNS record inside the "DNS → Records" page with the following information:

Code:

Type: CAA

Name: @

Flags: 0

TTL: Auto

Tag: Only allow specific hostnames

CA domain name: letsencrypt.org

Section 5: Optimizing NGINX for security and performance

This is the most important section of this tutorial. NGINX lets us customize a lot of settings. There are over 100 different settings to adjust if you want to properly secure it and get full performance out of it. All of this configuration is done inside the NGINX configuration file on your server, which you can open like this:

Bash:

sudo nano /etc/nginx/nginx.conf

Because there are so many settings, I have attached an optimized NGINX configuration template ("nginx.txt") to this tutorial. I have commented everything in that file. Please read it through, as you will have to fill in your domain name in a few places (lines 203-204). Once you have replaced the default NGINX configuration with the new one and saved it (using Ctrl+O → Enter → Ctrl+X), it's time to enable NGINX to start automatically on server boot, and to start the service:

Bash:

sudo systemctl enable nginx

sudo systemctl start nginx

You have now properly set up NGINX and your TLS certificates for your website and server application. Note that this setup also puts Cloudflare as a CDN in front of your website, to further enhance performance and security. So whenever a user visits your website, the traffic is routed like this:

User → Cloudflare CDN → NGINX Reverse Proxy → Backend
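One way to see this chain in action is to look at the response headers of your site, for example with "curl -sI https://<domain>". When traffic goes through Cloudflare I would expect a "server: cloudflare" header, and the HSTS setting should show up as "strict-transport-security". The helper below is only a sketch for pulling a single header out of that output; header_value is a name I chose, not an existing tool.

```shell
#!/bin/sh
# header_value HEADERS NAME
# Prints the value of the first header called NAME (case-insensitive)
# from raw HTTP response headers, e.g. the output of `curl -sI`.
header_value() {
    printf '%s\n' "$1" | tr -d '\r' |
        awk -F': *' -v name="$2" '
            tolower($1) == tolower(name) {
                sub(/^[^:]*: */, "")   # drop "Name: " and keep the value
                print
                exit
            }'
}
```

For instance: headers=$(curl -sI https://example.com) followed by header_value "$headers" server and header_value "$headers" strict-transport-security, with example.com replaced by your own domain.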

Create as many "server" blocks as you want inside the NGINX server configuration. I included one for a website as an example, running on port 8080 (change it to whatever port your website app is running on). You can also add one for your OT server application and port 7171. Just make sure to restart the NGINX service whenever you make any changes to the configuration.

Bash:

sudo systemctl restart nginx

You can then test your TLS setup on the SSL Labs website. By following this tutorial you will end up with an A+ score, which is the highest rating. So not only will you get a very secure and performant NGINX installation, but you will also get the highest grade of TLS possible, for free. There is no need to purchase TLS certificates from DigiCert for hundreds of euros, for example, to get a better rating than what 99% of websites have. This is by far the best way to do it and should be done for any website that you host, regardless of its importance.

If you have any questions, please post them down below.

Attachments

- nginx.txt (10.7 KB)